Research

I build learning systems shaped by biological constraints—developmental curricula, active sensing, recurrent computation, and continual adaptation—and validate them against behavior and neural measurements.

Developmental constraints on learning

Infant visual diet

Inspired by the Adaptive Initial Degradation hypothesis, we trained ANNs on a graded coarse-to-fine image diet and found strongly shape-biased classification behavior, along with robustness to image distortions and adversarial perturbations.
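A coarse-to-fine diet can be sketched as a blur schedule that starts heavy and decays to none over training. The sketch below assumes a separable Gaussian blur whose width decays linearly with the epoch; the function names, the starting sigma of 4 pixels, and the linear schedule are hypothetical choices for illustration, not the settings used in this work.

```python
import math


def blur_sigma(epoch, n_epochs, sigma_start=4.0):
    """Hypothetical linear schedule: strong blur at epoch 0, none at the last epoch."""
    frac = min(epoch / max(n_epochs - 1, 1), 1.0)
    return sigma_start * (1.0 - frac)


def gaussian_kernel_1d(sigma):
    """Normalized 1D Gaussian kernel; sigma <= 0 gives the identity kernel [1.0]."""
    if sigma <= 0:
        return [1.0]
    radius = max(1, math.ceil(3 * sigma))
    weights = [math.exp(-(x * x) / (2 * sigma * sigma)) for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_row(row, kernel):
    """Convolve one row with the kernel, replicating edge pixels at the borders."""
    r = len(kernel) // 2
    n = len(row)
    return [
        sum(w * row[min(max(i + k - r, 0), n - 1)] for k, w in enumerate(kernel))
        for i in range(n)
    ]


def blur_image(img, sigma):
    """Separable Gaussian blur of a 2D list: rows first, then columns."""
    kernel = gaussian_kernel_1d(sigma)
    rows = [blur_row(r, kernel) for r in img]
    cols = [blur_row(list(c), kernel) for c in zip(*rows)]
    return [list(r) for r in zip(*cols)]


# Usage: blur each training image according to the current epoch.
image = [[0, 0, 0, 0], [0, 9, 9, 0], [0, 9, 9, 0], [0, 0, 0, 0]]
for epoch in range(3):
    sigma = blur_sigma(epoch, n_epochs=3)
    print(epoch, sigma, [round(v, 2) for v in blur_image(image, sigma)[1]])
```

A smooth decay is only one option; a stepwise schedule (a few discrete acuity stages) would fit the same "graded" description.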

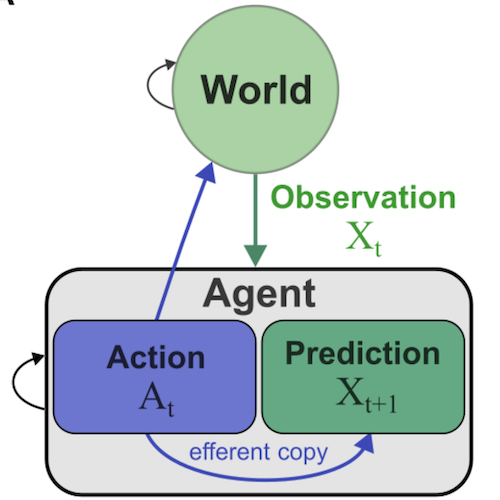

Active perception and world modeling

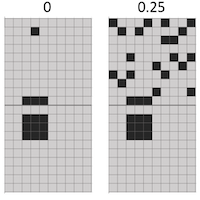

Sequence model interpretability

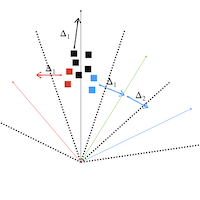

Predicting the next input yields representations that encode relational structure among the inputs: an RNN path-integrates and binds input tokens to their absolute locations in 2D scenes, in context.
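To see why next-input prediction rewards path integration and binding, consider the idealized computation a perfect predictor would need. This sketch assumes a grid world with unit moves and one token per location; the move vocabulary and function name are hypothetical, and the code describes the target computation, not the mechanism the RNN actually learns.

```python
# Unit moves on a 2D grid (hypothetical action vocabulary).
MOVES = {"N": (0, 1), "S": (0, -1), "E": (1, 0), "W": (-1, 0)}


def ideal_next_input_predictions(start_token, steps):
    """Idealized next-input predictor over (move, observed_token) steps.

    Returns, for each step, the token predicted before it is observed,
    or None when the location has not been visited yet in this sequence.
    """
    pos = (0, 0)
    memory = {pos: start_token}  # in-context binding: absolute location -> token
    predictions = []
    for move, observed in steps:
        dx, dy = MOVES[move]
        pos = (pos[0] + dx, pos[1] + dy)  # path integration of relative moves
        predictions.append(memory.get(pos))
        memory[pos] = observed  # bind the new token to its location
    return predictions


# Walking a loop back to the start lets the predictor anticipate "a".
steps = [("E", "b"), ("N", "c"), ("W", "d"), ("S", "a")]
print(ideal_next_input_predictions("a", steps))  # [None, None, None, 'a']
```

Only revisits are predictable, so a network can reduce its loss on them only by tracking absolute position from relative moves and storing what it saw where.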

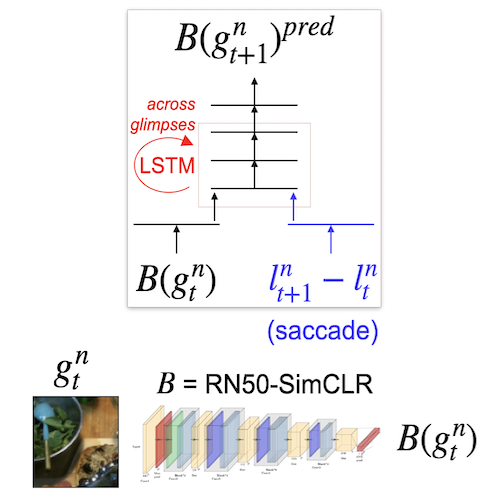

Scene representation

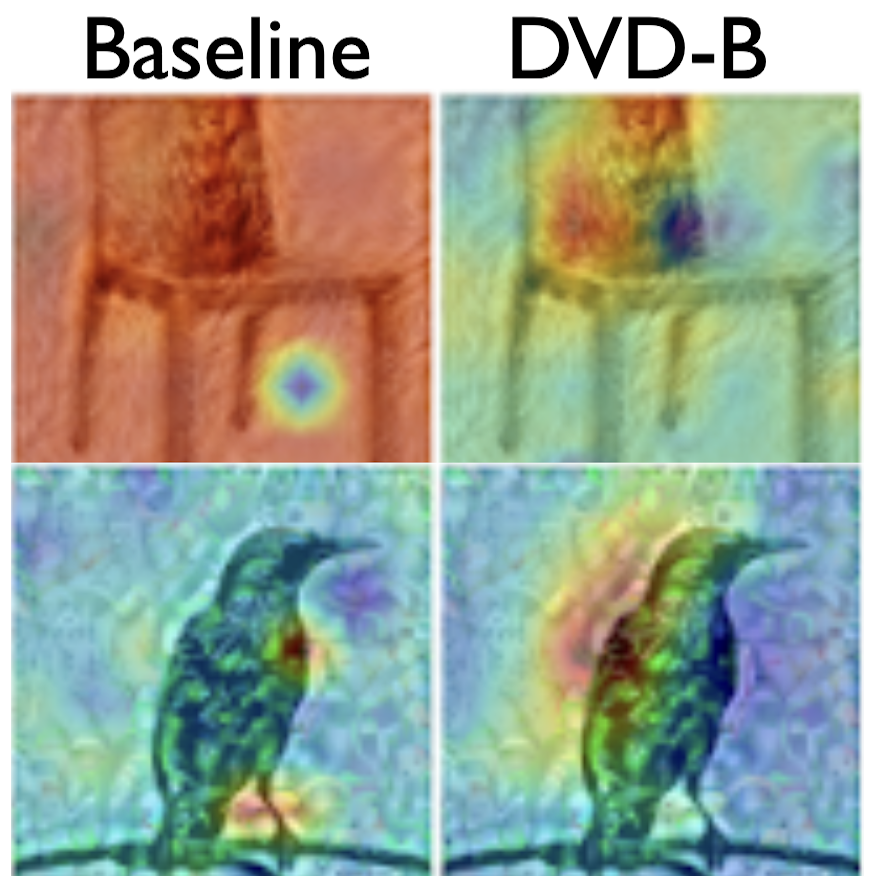

Predicting the features of the next glimpse, given the upcoming saccade, coaxes a network to encode co-occurrence and spatial arrangement in a scene representation aligned with visual cortex.
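One way to write a next-glimpse prediction objective, assuming glimpse features $g_t$ from a fixed encoder, a saccade vector $s_t$, a recurrent state $h_t$, and a squared-error loss (all notation here is illustrative, not taken from the original work):

$$
h_t = f_\theta(h_{t-1}, g_t), \qquad
\hat g_{t+1} = p_\phi(h_t, s_t), \qquad
\mathcal{L} = \sum_{t=1}^{T-1} \big\lVert \hat g_{t+1} - g_{t+1} \big\rVert_2^2
$$

Intuitively, because $s_t$ is given, the network cannot lower the loss by guessing where it will look next; it has to represent what is likely to be found at the new location, which calls for information about co-occurrence and spatial arrangement.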

Controlling attention

In cluttered object tracking trained with RL, an agent learns an explicit encoding of its attentional state (an “attention schema”), which is most useful when the focus of attention cannot be inferred from the stimulus.

Adaptation and cognitive flexibility

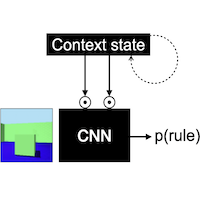

Rule inference in NNs

Inspired by Hummos, we built an image-based variant of the Wisconsin Card Sorting Task and found behavior suggestive of early signs of cognitive flexibility: compositional rule inference in activity space.

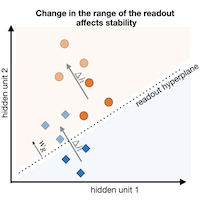

Continual learning and drift

Readout misalignment caused by learning-induced representational drift is a core problem in continual learning. Constraining drift to the null space of the readout helps networks remain both stable and plastic.
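One way to formalize this constraint, assuming a linear readout $y = W h$ with $W \in \mathbb{R}^{k \times d}$ of full row rank ($k < d$), is to project any change in the hidden representation onto the null space of $W$. This is a generic orthogonal-projection sketch, not necessarily the exact method used here:

$$
P = I_d - W^\top \left(W W^\top\right)^{-1} W, \qquad
\Delta h_{\text{constrained}} = P\,\Delta h,
$$

$$
W\,\Delta h_{\text{constrained}} = \left(W - W W^\top \left(W W^\top\right)^{-1} W\right)\Delta h = (W - W)\,\Delta h = 0.
$$

The representation stays free to change (plasticity) along the $d - k$ directions the readout ignores, while the readout output $y$ is unchanged (stability).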

Feedback and recurrent computation

Representational dynamics in RCNNs

Decluttering due to recurrence

Recurrent flow carries category-orthogonal feature information (e.g., object location), which is used iteratively to constrain subsequent category inferences.

Attention, search, and selection in scenes

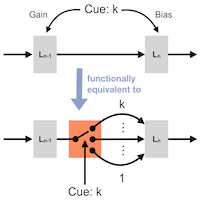

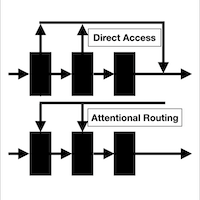

Modulation as routing

Attentional routing can push task-relevant information through the network as effectively as “direct access,” challenging claims that direct access is necessary to explain behavior.

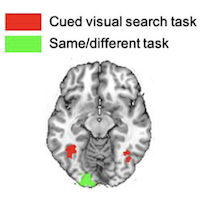

Task-dependence of visual representations

The relationship between representations of multi-object displays and of isolated objects is task-dependent: in MEG/fMRI, the two are related earlier during same/different judgments and later during object search.

Characterizing search templates

Search templates encode object identity and size; size is inferred from location in the scene and is entangled with identity in the template.

Implicitly learning distractor co-occurrence

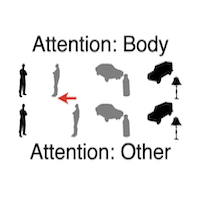

High-level feature-based attention

In high-level visual cortex, feature-based attention modulates fMRI representations of body silhouettes presented at task-irrelevant locations.

Attentional modulation in NNs

Scene context

Scene co-variation biases categorization, but across four experiments we found no evidence that task-irrelevant scenes increase sensitivity for detecting co-varying objects.

Characterizing perception

Ventral stream organization

Animacy organization in the ventral stream is not fully driven by visual-feature differences; it also depends on inferred factors such as behaviorally quantified agency.

Priors in perceptual report

In a Sperling-like task, people report occasionally present inverted letters as upright to the same extent as they do when those letters are absent, suggesting that expectation-driven illusions may be post-perceptual.

Engineering computational systems

Brain ↔ language interface

fMRI responses to natural scenes are used to condition word generation in a Transformer, enabling flexible readout of semantic properties such as object class and numerosity.

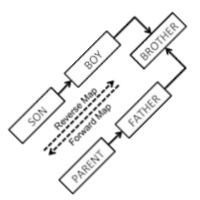

NLP / graph search

A reverse dictionary based on n-hop reverse search over a definition graph. It matches state-of-the-art performance on a ~3k-word lexicon but does not scale well to ~80k words.
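The core idea of n-hop reverse search can be sketched on a toy definition graph: index each word by the headwords whose definitions use it, then walk those reverse edges outward from the query words. The whitespace tokenization, the 1/hop scoring, the `n_hops` and `top_k` parameters, and the toy lexicon are all hypothetical, and this toy version says nothing about the accuracy or scaling results reported for the actual system.

```python
from collections import defaultdict


def build_reverse_index(definitions):
    """Map each word to the headwords whose definitions contain it."""
    index = defaultdict(set)
    for head, gloss in definitions.items():
        for token in gloss.lower().split():
            index[token].add(head)
    return index


def reverse_lookup(query, definitions, n_hops=2, top_k=3):
    """Rank headwords reachable from the query words within n_hops reverse edges.

    Hypothetical scoring: a headword reached at hop h gains 1/h per query word.
    """
    index = build_reverse_index(definitions)
    scores = defaultdict(float)
    for word in set(query.lower().split()):
        frontier, seen = {word}, {word}
        for hop in range(1, n_hops + 1):
            reached = set()
            for w in frontier:
                reached |= index.get(w, set()) - seen
            for head in reached:
                scores[head] += 1.0 / hop
            seen |= reached
            frontier = reached
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [head for head, _ in ranked[:top_k]]


toy = {
    "puppy": "young dog",
    "dog": "domestic animal that barks",
    "kitten": "young cat",
    "cat": "domestic animal that meows",
}
print(reverse_lookup("young barks", toy))  # ['puppy', 'dog', 'kitten']
```

The frontier grows with each hop, which hints at why this style of search gets harder as the lexicon and its definition graph grow.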

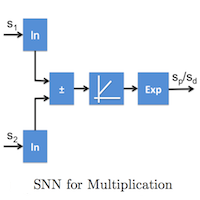

SNN control

Model-based control for velocity–waypoint navigation, plus modular SNNs that perform real-time arithmetic using plastic synapses.